Table of Contents

HW 01 - Quadruped locomotion using random search

Your first homework will be to write a neural network and optimization algorithm which makes a quadruped model move towards a given location. You will not be using any more advanced techniques like gradient descent in this case because the evaluation function is not differentiable with respect to the parameters. Instead you will implement a simple heuristic random search algorithm which will optimize the task. The point of this homework is to familiarize the student with formulating a problem as an optimization task and writing a very basic solver to solve it. You should get an intuition of how such a basic optimization process behaves and how it depends on various parameters.

This is a 'warmup' homework and will be introduced in the labs in the 3rd week. It uses your knowledge of numpy and the very basics of neural networks. Pytorch is not required for this homework but will be used heavily in the following weeks and the second homework will will be on image segmentation.

PyBullet

Pybullet is a dynamics simulation library in python which uses the well known Bullet physics engine. It's probably the simplest, easiest to install and most flexible library in python which can be used for robotic simulation.

The library and the installation process is described here in a bit more detail.

Quadruped Environment

You are given an environment which defines the quadruped. The environment defines everything that is required to simulate and render the robot. We will use a very similar API as OpenAI gym.

The most important parts are

- the input and output dimensions. These can be used to initialize your control algorithm. Note that we are using a generic 'black box' optimization algorithm in this case which is agnostic to the 'system' that we are controlling. This means that it assumes very little about the 'system'. The input and output dimensions and ranges are all that is required. The range is usually somewhere around [-1,1].

- next_obs, r, done, _ = step(ctrl) function. This function takes in the control action for the given time step and returns the next observation, the reward for taking action 'ctrl' and a boolean 'done' which shows if the episode has finished.

- obs = reset() function. This function resets the environment to a new episode which positions the robot to the initial state and resets the counters. It also returns the first observation with which you start the episode.

Our robot environment is defined in a single .py file and the body of the quadruped in a .urdf file. You are encouraged to go through all functions to understand how it works.

PYTHONPATH to point inside the hw_1 directory. Otherwise you will get import errors. Another solution is to manually modify the PYTHONPATH.

The quadruped model has 3 joints per leg. The observations are a concatenation of joint angles, torso quaternion and four binary leg contact points. The output is the target joint angle and has a dimension of 12, 1 for each joint. The model has an internal PID for each joint with parameters defined inside the model.

Your task

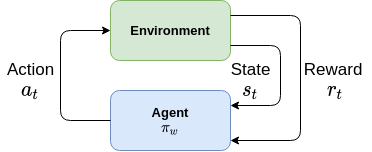

Design and train a neural network using numpy which makes the quadruped walk in the positive x direction with a given speed. You can formulate the quadruped environment as an MDP with state space $S$, action space $A$, transition function $s_{t+1} \sim T(s_t, a_t)$, reward function $r = F(s_t, a_t)$ and horizon $h$. We define a policy function $a_t \sim \pi_{w}(s_t)$ which maps states to actions and is parameterized by a vector $w$. The objective is to find a parameter vector $w$ which maximizes the cumulative reward.

$$ w^* = \underset{w}{\arg\max} \sum_{s_t \sim T, a_t \sim \pi_w} r(s_t, a_t) $$

This is done in episodes. The episode lasts a fixed amount of steps (horizon h) in our case, after which the robot is reset to its initial position.

You have to implement:

- A Policy class which maps observations to actions. In other words, it takes as input a given state of the quadruped (joint angles, body orientation, etc..) and outputs an action (target joint angles for all joints). To start with, you can use a simple linear (actually affine) mapping from state to action. But to get better results you can experiment with various architectures, additional features, etc. Note that the policy doesn't have to be a 'neural network' or linear policy. It can be some handcrafted algorithm. The only requirement is that it has learnable parameters which improve the policy. You can implement your policy in numpy. Pytorch is not required.

- An iterative random search algorithm which optimizes parameters of your policy to solve the task. In other words, the algorithm takes as input the environment and your parameterized policy function, aka control algorithm (neural network or whatever you use), and outputs parameters which make it perform good on the environment (in other words, maximize cumulative reward).

- (Optional) Modify the given reward function proxy which is given in the environment. You can shape the reward as you see fit to change the behavior of the robot. A better proxy also means better and faster optimization. Note: Why do we call it a 'proxy'? The actual reward function for a complicated legged robot gait is unknown. Instead we use something which we call a 'proxy', which is a function which tries to achieve the same goal and is relatively simple to describe and optimize. In our case, we want the robot to walk a certain direction at a given speed, so we can reward it for exactly that, having that speed in the given direction. You can try to instead maximize the reward for distance covered. It will give a different result. You can try make it jump by maximizing the cumulative height of the body, etc..

Random search algorithm

The method that you will be using to optimize the weights of your algorithm is essentially random search. This can be summarized using the following:

* step 1: Start with initial guess for solution (can be random) * step 2: Perturb solution with some element of stochasticity * step 3: Map solution onto your problem and evaluate it * step 4: If new solution is better than the last best, then new solution is a function of the old and perturbed solution * step 5: If satisfied, then finish, otherwise go to step 2

- Try different values for perturbation (epsilon) parameters

- Adapt epsilon according to progress

- Use two loops, coarse and fine grained epsilon

- Monitor weights and actions to see if anything is bad

- Run many iterations in parallel and kill after nth (prune early failures, continue just the good ones, or the best one)

- Try a better update rule (fit a distribution rather than just single entities)

- Try compute additional feature values for your policy (or just use a multi layered neural network. This might be more difficult to optimize)

- Try running many candidates before updating (batched version)

- Use evolutionary heuristics. Get N versions of current solution by perturbing each one slightly differently. Fit the new solution as a weighted estimate of the perturbed candidates, or by fitting a distribution.

- You can multithread your algo (1 thread=1 rollout)

Submission

- Delete any pytorch imports that you have in your project. The only image in brute that has pybullet doesn't have pytorch.

- Do the following change:

policy_path = os.path.join(os.path.dirname(os.path.realpath(__file__)), 'agents/quadruped_bbx.npy')in blackbox_opt.py

Otherwise just download the new template and copypaste your solution there. The upload in brute is being tested so if it doesn't work as planned, don't be alarmed, just write me an email.

Submit the whole project file as you download it. The agents folder should contain your trained agent and the PolicyNet class should contain your implementation of the control algorithm, which can be anything, as long as it has learnable parameters. It will be tested in a similar fashion

as the test function in blackbox_opt so make sure that your entire script, including the test function runs successfully.

hw_1/src/Lab_2/<code here>

Points

The reward function used to evaluate the environment will be the same one as given in the template.

- 5 points if your agent can achieve an average reward of 50

- 8 points if your agent can achieve an average reward of 70

The maximum achievable points for this homework will be 8 points.

Deadline

- Individual part: D + 14 days, 23:59 CEST, where D is a day of your tutorial where the homework is introduced (in 3rd week)

Every 24 hours after the deadline, you will lose 1 points. However, you will not gain a negative number of points, so the minimum is 0.