13 Classifiers II

We will focus on the assignment task. As optional material, we can also look at linear classifiers, an example of a classifier for data whose probability distribution is unknown.

Linear classifier - example

To avoid estimating probability distributions, you can construct the discriminant functions directly, in the following form:

$$g_s(\vec{x})=\vec{w}_s^{\top}\vec{x}+b_s$$

where $\vec{w}_s$ is the weight vector of class $s$ and the scalar $b_s$ is its bias (offset) term. An object $\vec{x}$ is assigned to the class $s$ whose discriminant function $g_s(\vec{x})$ has the highest value among all classes. Training the classifier is therefore an optimization task: we look for the parameters of the linear functions that minimize a given criterion, for example the number of misclassifications on the training multiset.
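The decision rule and the error criterion described above can be sketched in a few lines of Python. This is only an illustration: the weights, biases, samples, and labels are hypothetical values chosen by hand, not the data from the figure, and the function names are our own.

```python
def discriminant(w, b, x):
    """Evaluate g_s(x) = w_s^T x + b_s for a single class s."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(weights, biases, x):
    """Return the index s of the class with the highest g_s(x)."""
    scores = [discriminant(w, b, x) for w, b in zip(weights, biases)]
    return max(range(len(scores)), key=scores.__getitem__)


def count_errors(weights, biases, samples, labels):
    """Criterion to minimize: number of misclassified training samples."""
    return sum(classify(weights, biases, x) != y
               for x, y in zip(samples, labels))


# Hypothetical parameters for 4 classes in a 2D feature space
# (assumed for illustration, not learned from any data).
weights = [(1.0, 0.0), (-1.0, 0.0), (0.0, 1.0), (0.0, -1.0)]
biases = [0.0, 0.0, 0.0, 0.0]

# Hypothetical training samples with their true class labels.
samples = [(2.0, 0.5), (-3.0, 1.0), (0.5, 2.0), (0.2, -1.5), (1.0, 3.0)]
labels = [0, 1, 2, 3, 0]

predictions = [classify(weights, biases, x) for x in samples]
errors = count_errors(weights, biases, samples, labels)
print(predictions, errors)
```

With these assumed parameters the last sample is assigned to class 2 although its label is 0, so the criterion evaluates to one error. A training procedure would adjust `weights` and `biases` to reduce this count.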

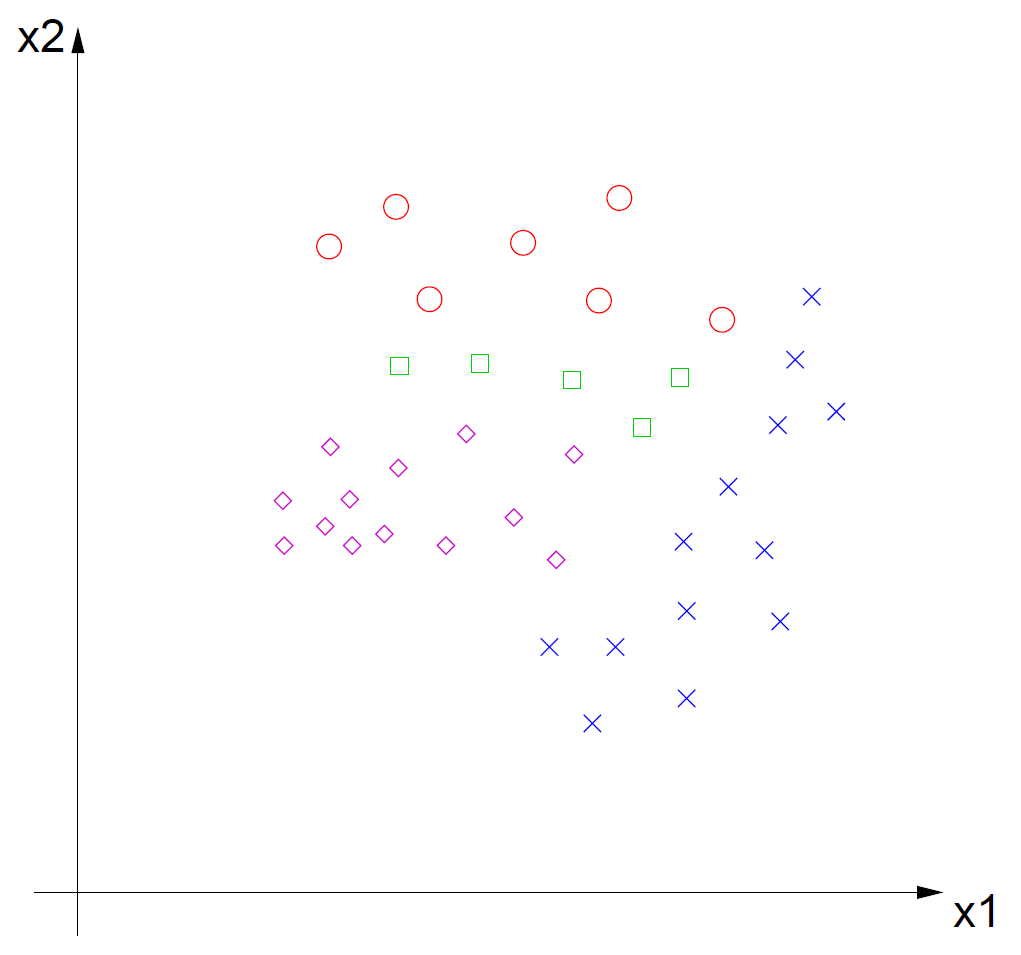

Is it possible to get zero classification errors on the given training multiset using only a linear classifier? If yes, draw the solution.

Figure 1: Training multiset in a 2D feature space with 4 classes.